Artificial intelligence holds immense promise for medicine, communication, and global progress. However, fraudsters have quickly adapted this same technology for darker purposes. Among the most disturbing developments is the rise of deepfake medical scams that exploit trust and target seniors across the world. These scams are no longer local problems. They are international threats that combine false medical claims, cloned voices, and fabricated authority.

📰 How Deepfakes Are Used in Medical Fraud

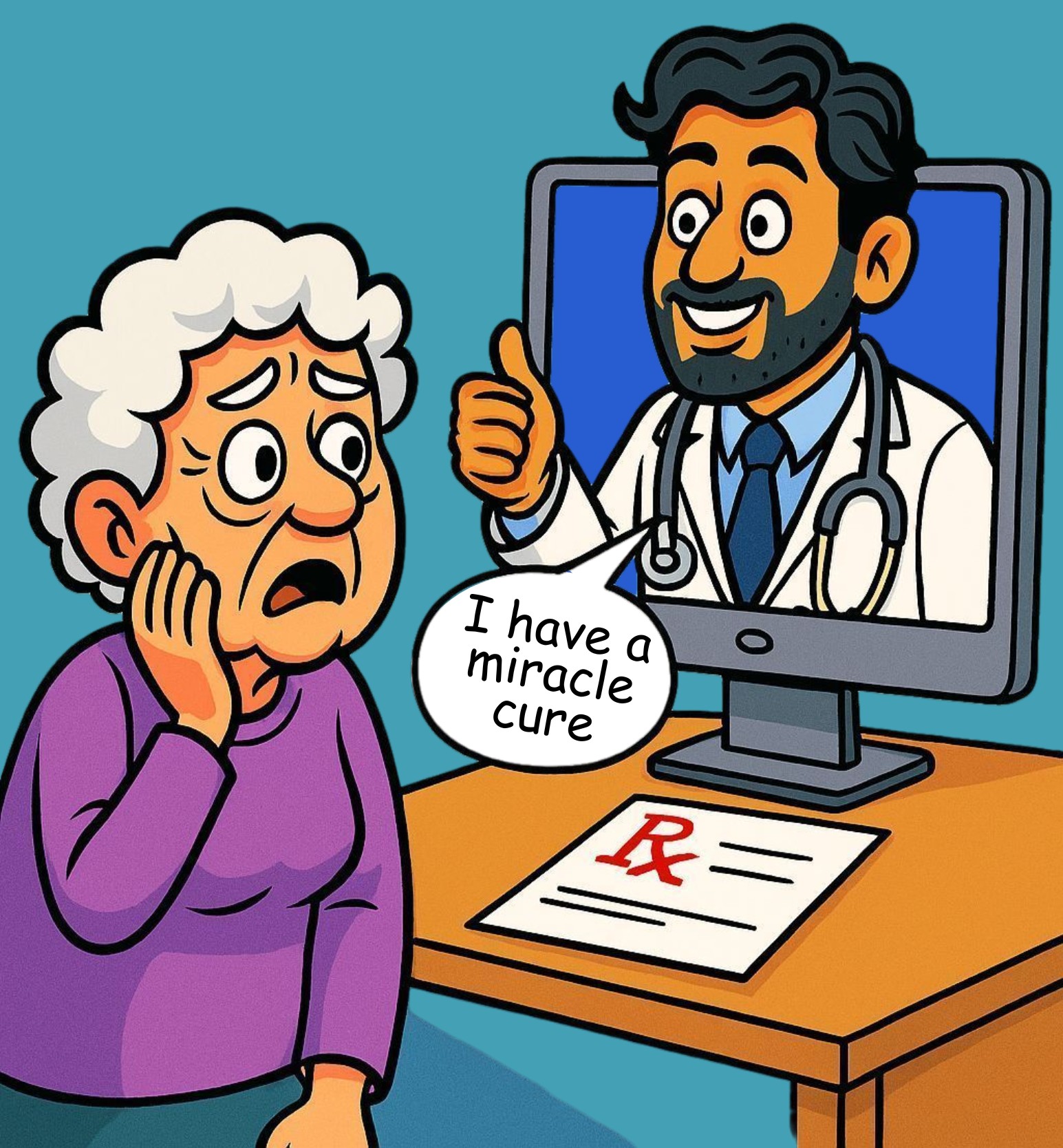

Medical scams have long existed, but deepfake technology has given fraudsters tools that are more convincing than anything seen before. Seniors are being deceived by fake endorsements, manipulated news broadcasts, and even cloned voices of loved ones.

🇨🇦 Canada: A fraudulent advertisement for a supplement called Blood Balance Plus used the CBC News logo and fabricated doctors, claiming the product could reverse type 2 diabetes in fourteen days. Ads like this often appear in the sponsored-content sections of legitimate news stories, served by ad providers such as Outbrain, Google, and Taboola. Paid scam advertisements are also widespread on Facebook.

🇺🇸 United States: Seniors have received urgent phone calls from supposed grandchildren in medical or legal distress. The voices sounded real but were generated through artificial intelligence.

🇬🇧 United Kingdom: Fake celebrity endorsements have appeared in online advertisements for miracle arthritis cures, shared widely on social media platforms.

🇦🇺 Australia: Deepfake video clips of well-known personalities have been used to promote unproven pills and alternative treatments.

🇮🇳 India: Authorities have reported fraudulent medical consultations in which fake doctors appear on video calls, using synthetic images and audio to demand upfront payment for treatment that does not exist.

These scams adapt to local languages and cultural contexts, but the strategy is always the same: impersonate trusted figures and push seniors toward financial loss or dangerous health choices.

👵 Why Seniors Are Especially Vulnerable

Seniors remain the most frequent victims of deepfake medical fraud for several reasons:

🎯 Trust in authority: Older adults are more inclined to believe established institutions, respected doctors, or familiar news sources. Deepfakes that impersonate these voices appear highly credible.

💊 Health concerns: Chronic illnesses and the desire for relief make miracle cure offers particularly tempting.

💻 Digital unfamiliarity: Many seniors are less familiar with synthetic media and cannot easily detect unnatural visuals or audio inconsistencies.

🏠 Isolation: Seniors who live alone often rely heavily on digital messages or phone calls, which makes them more susceptible to manipulation.

The outcome is often not only financial loss but also delayed or abandoned legitimate treatment.

💸 The Global Impact

The financial and health consequences are growing worldwide:

🇺🇸 United States: The Federal Trade Commission has reported billions in annual losses among older adults, with deepfake scams representing an increasing share.

🇪🇺 Europe: Manipulated media is recognized as a serious and expanding fraud risk, with significant financial harm to individuals and institutions.

🇨🇳 China: Regulators are tracking the rise of deepfake investment and medical scams that circulate on popular messaging platforms.

🌎 Latin America: Law enforcement agencies are investigating fraudulent advertisements for cures that never existed, many of which feature digitally altered voices of doctors.

Beyond financial harm, the health consequences are severe. Seniors who trust a deepfake medical message may delay visiting their physician or may purchase unsafe products.

⚖️ Regulation and International Responses

Governments and global organizations are beginning to react, though progress is uneven:

🇺🇳 United Nations: The International Telecommunication Union has urged countries to adopt standards for detecting and labeling manipulated audiovisual content.

🇩🇰 Denmark: Proposed legislation seeks to criminalize the distribution of deepfake impersonations of voices and faces.

🇺🇸 United States: Existing fraud and identity theft laws are being applied to deepfake scams, but critics argue that more specific legal tools are necessary.

🇪🇺 European Union: New frameworks are under discussion to hold platforms accountable for hosting deceptive AI content.

Social media platforms themselves are under pressure to introduce stricter rules for advertisements and to clearly label AI-generated content.

🛡️ Protective Steps for Seniors and Families

Awareness remains the most effective immediate defense. Practical steps include:

🩺 Verify medical claims with official doctors or health agencies instead of relying on online advertisements

📞 Confirm the identity of callers using known numbers rather than numbers provided in suspicious calls

⏳ Pause before acting on urgent requests, since urgency is a tactic used to force hasty decisions

👀 Watch for signs of manipulation such as mismatched lip movements, strange lighting, or robotic tones

🫂 Promote open family discussions about scams so that seniors feel supported and confident in questioning suspicious messages

Intergenerational training programs and senior community workshops can further strengthen digital resilience.

Deepfake medical fraud is no longer an isolated issue. It is a global challenge that undermines financial security, public trust, and health outcomes. Seniors stand at the center of this crisis, but its ripple effects touch families, caregivers, and health systems everywhere.

To meet this challenge, the world must act in unison. Governments must strengthen laws, companies must improve detection and accountability, and families must remain vigilant. Above all, seniors need education and community support so that they can recognize the threat.

The technology that fuels these scams is powerful, but awareness remains more powerful. Seniors deserve safety, dignity, and truth in a digital world increasingly clouded by deception.
